How to Build a Scraper and Download a File with Puppeteer

Mihnea-Octavian Manolache on Apr 25 2023

If you are into web scraping and you’re using Node.js, then you have most likely heard of Puppeteer. And you have almost certainly come across a task that required you to download a file with Puppeteer. This is, indeed, a recurring task in the scraping community, but it isn’t well covered in the Puppeteer documentation.

Fortunately, we’ll take care of it together. In this article, we are going to discuss file downloads in Puppeteer. There are two goals I want us to touch today:

- Have a solid understanding of how Puppeteer handles downloads

- Create a working file-downloading scraper using Node.js and Puppeteer

By the end of this article, you will have acquired both the theoretical and practical skills that a developer needs to build a file scraper. If this project sounds as exciting to you as it does to me, let us get going!

Why download files with Puppeteer?

There are many use cases for a file scraper, and StackOverflow is full of developers looking for answers on how to download files with Puppeteer. Keep in mind that files include images, PDFs, Excel or Word documents, and many more, all of which can hold very important information.

For example, there are businesses in the dropshipping industry that rely on images scraped from external sources, such as marketplaces. Another good example of a file downloading scraper’s use case is for companies that monitor official documents. Or even small projects. I myself have a script that downloads invoices from a partner’s website.

Now when it comes to using Puppeteer to download files, I find that most people choose it for two main reasons:

- It’s designed for Node.js, and JavaScript is one of the most popular programming languages, both for the front end and back end.

- It opens a real browser, and some websites rely on JavaScript to render content. This means you wouldn't be able to download the files using a regular HTTP client, which cannot execute JavaScript.

How Puppeteer handles file downloading

To understand how to download files with Puppeteer, we have to know how Chrome does it too. That is because, at its core, Puppeteer is a library that ‘controls’ Chrome through the Chrome DevTools Protocol (CDP).

In Chrome, files can be downloaded:

- Manually, with a click of a button for example

- Programmatically, through the Page Domain from CDP.

There is also a third technique used in web scraping, which brings in one new actor: an HTTP client. Here, the web scraper gathers the `href`s of the files and an HTTP client then downloads them. Each option has its particular use cases, so we’ll explore all three.

Download files in Puppeteer with a click of a button

In the fortunate case that the website you want to scrape files from uses buttons, all you need to do is simulate the click event in Puppeteer. The implementation of a file downloader is pretty straightforward in this scenario, and the Page.click() method is covered in Puppeteer's API documentation.

Since this is ‘human like’ behavior, what we need to do is:

- Open up the browser

- Navigate to the targeted webpage

- Locate the button element (by its CSS selector or xPath for example)

- Click the button

There are only four simple steps we need to implement in our script. However, before we dive into coding it, let me tell you that just like your day-to-day browser, the Chrome instance controlled by Puppeteer will save the downloaded file in the default download folder, which is:

- \Users\<username>\Downloads for Windows

- /Users/<username>/Downloads for Mac

- /home/<username>/Downloads for Linux

With this in mind, let’s start coding. We’ll assume we’re astrophysicists and we need to gather some data from NASA, that we’ll process later on. For now, let’s focus on downloading the .doc files.

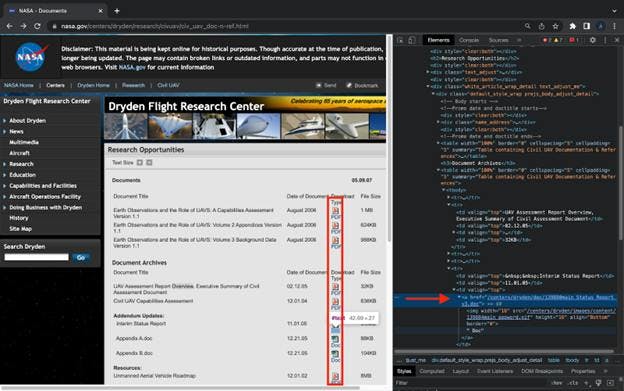

#1: Identify ‘clickable’ elements

We’ll navigate to the NASA page we want to scrape and inspect its elements. Our focus is to identify ‘clickable’ elements. To find them, open up Developer Tools in Chrome (Command + Option + I on Mac, Control + Shift + I on Windows and Linux).

#2: Code the project

import puppeteer from "puppeteer";

(async () => {
  const browser = await puppeteer.launch({ headless: false })
  const page = await browser.newPage()
  await page.goto('https://www.nasa.gov/centers/dryden/research/civuav/civ_uav_doc-n-ref.html',
    { waitUntil: 'networkidle0' })

  // Select every row of the documents table.
  // Note: newer Puppeteer releases dropped page.$x; there, page.$$('xpath/...') is the equivalent.
  const tr_elements = await page.$x('html/body/div[1]/div[3]/div[2]/div[2]/div[5]/div[1]/table[2]/tbody/tr')

  // nth-child is 1-based while the array is 0-based, so row i is tr_elements[i - 1].
  for (let i = 1; i <= tr_elements.length; i++) {
    const text = await tr_elements[i - 1].evaluate(el => el.textContent)
    if (text.toLocaleLowerCase().includes('doc')) {
      try {
        await page.click(`#backtoTop > div.box_710_cap > div.box_710.box_white.box_710_white > div.white_article_wrap_detail.text_adjust_me > div.default_style_wrap.prejs_body_adjust_detail > table:nth-child(6) > tbody > tr:nth-child(${i}) > td:nth-child(3) > a`)
      } catch {
        // Rows without a link in the third cell are skipped.
      }
    }
  }

  // Closing right away can interrupt a download that is still in progress.
  await browser.close()
})()

What we are doing here is to:

- Launch Puppeteer and navigate to our targeted website

- Select all `tr` elements, which hold the `href` we want to click later

- Iterate through the `tr` elements and

a. Check if the text inside the element contains the word ‘doc’

b. If it does, we build the selector and click the element

- Close the browser

And that is it. Downloading files with Puppeteer can be as simple as it gets.
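One caveat with the click approach: the script calls `browser.close()` right after the last click, so a large file may still be in flight at that point. Below is a minimal sketch of one way to detect that, assuming Chrome gives in-progress downloads a `.crdownload` suffix. The helper name `hasPendingDownloads` is hypothetical and not part of Puppeteer.

```javascript
// Hypothetical helper (not part of Puppeteer): given the file names found in
// the download folder, report whether Chrome is still writing any of them.
// Assumption: in-progress Chrome downloads carry a ".crdownload" suffix.
function hasPendingDownloads(fileNames) {
  return fileNames.some((name) => name.toLowerCase().endsWith('.crdownload'))
}

// Example with made-up file names: one finished file, one still downloading.
console.log(hasPendingDownloads(['report.doc', 'notes.doc.crdownload']))
console.log(hasPendingDownloads(['report.doc']))
```

In a real script you could list the download folder periodically (for example with `readdir` from `node:fs/promises`) and close the browser only once this check returns false, ideally with a timeout. Keep in mind that a file may not have appeared in the folder yet immediately after the click.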

Download files in Puppeteer with CDP

I know the default downloads folder is not a big problem for small projects. For bigger projects, on the other hand, you will surely want to organize the files downloaded with Puppeteer in different directories. And that is where CDP comes into play. To achieve this goal, we will keep the current code and only add to it.

The first thing one can think of is resolving the path to the current directory. Luckily, we can use the built-in node:path module. All we need to do is import the `path` module into our project and use the `resolve` method, as you will see in just a moment.

The second aspect is setting the path in our browser using CDP. As I said before, we’ll use the Page Domain's `.setDownloadBehavior` method. Here is what our updated code looks like with the two additions:

import puppeteer from "puppeteer";
import path from 'path';

(async () => {
  const browser = await puppeteer.launch({ headless: false })
  const page = await browser.newPage()

  // Open a raw CDP session and point downloads at ./documents.
  const client = await page.target().createCDPSession()
  await client.send('Page.setDownloadBehavior', {
    behavior: 'allow',
    downloadPath: path.resolve('./documents')
  });

  await page.goto('https://www.nasa.gov/centers/dryden/research/civuav/civ_uav_doc-n-ref.html',
    { waitUntil: 'networkidle0' })

  // Note: newer Puppeteer releases dropped page.$x; there, page.$$('xpath/...') is the equivalent.
  const tr_elements = await page.$x('html/body/div[1]/div[3]/div[2]/div[2]/div[5]/div[1]/table[2]/tbody/tr')

  // nth-child is 1-based while the array is 0-based, so row i is tr_elements[i - 1].
  for (let i = 1; i <= tr_elements.length; i++) {
    const text = await tr_elements[i - 1].evaluate(el => el.textContent)
    if (text.toLocaleLowerCase().includes('doc')) {
      try {
        await page.click(`#backtoTop > div.box_710_cap > div.box_710.box_white.box_710_white > div.white_article_wrap_detail.text_adjust_me > div.default_style_wrap.prejs_body_adjust_detail > table:nth-child(6) > tbody > tr:nth-child(${i}) > td:nth-child(3) > a`)
      } catch {
        // Rows without a link in the third cell are skipped.
      }
    }
  }

  // Closing right away can interrupt a download that is still in progress.
  await browser.close()
})()

Here is what we’re doing with the added code:

- We are creating a new CDPSession to ‘talk raw Chrome Devtools Protocol’

- We are sending the `Page.setDownloadBehavior` command, where

a. `behavior` is set to `allow` downloads

b. `downloadPath` is built with `node:path` to point to the folder where we’ll store our files

And that is all you have to do if you want to change the directory in which to save files with Puppeteer. Moreover, we have also achieved our goal of building a file downloading web scraper.
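If you want different groups of files to land in different folders, one option is to send the same command again with a new path before each group of clicks. The sketch below only builds the parameters for that command. The helper name `downloadBehaviorFor` and the category labels are hypothetical, and it assumes the base directory is already absolute and that `/` works as a separator on your system.

```javascript
// Hypothetical helper: build the parameters for Page.setDownloadBehavior so
// that each category of file lands in its own subfolder.
// Assumptions: baseDir is already an absolute path (e.g. from path.resolve)
// and "/" is an acceptable path separator on the target system.
function downloadBehaviorFor(baseDir, category) {
  // Keep only characters that are safe in a folder name.
  const folder = category.toLowerCase().replace(/[^a-z0-9_-]+/g, '-')
  // Drop trailing slashes or backslashes so we do not end up with "//".
  const root = baseDir.replace(/[\\\/]+$/, '')
  return { behavior: 'allow', downloadPath: `${root}/${folder}` }
}

// Example with a made-up base directory and category label.
console.log(downloadBehaviorFor('/tmp/documents', 'UAV Reports'))
```

With the `client` from the script above, this could be used as `await client.send('Page.setDownloadBehavior', downloadBehaviorFor(path.resolve('./documents'), 'reports'))` before clicking the links that belong to that category.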

Download files with Puppeteer and Axios

The third option that we’ve discussed is to gather links to the targeted files and use an HTTP client to download them. I personally prefer axios, but there are alternatives to it when it comes to web scraping. So, for the purposes of this tutorial, I am going to use axios and assume we’re building a scraper for images of auctioned cars.

#1: Collect the links to our files

import puppeteer from "puppeteer";

const get_links = async (url) => {
  const hrefs = []
  const browser = await puppeteer.launch({ headless: false })
  const page = await browser.newPage()
  await page.goto(url, { waitUntil: 'networkidle0' })

  const images = await page.$$('img')
  // Visit every element handle; for...of avoids off-by-one indexing.
  for (const image of images) {
    try {
      hrefs.push(await image.evaluate(img => img.src))
    } catch {
      // Skip elements that can no longer be evaluated.
    }
  }

  await browser.close()
  return hrefs
}

I am sure that by now you’re already familiar with the Puppeteer syntax. As opposed to the scripts above, what we’re doing now is evaluating `img` elements, extracting their source URL, appending it to an array, and returning the array. Nothing fancy about this function.
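The array returned by `get_links` can contain duplicates, empty strings, or inline `data:` URIs, none of which are worth passing to an HTTP client. Here is a small sketch of a filtering step you could run on it first. The name `clean_links` is hypothetical and the URLs are placeholders.

```javascript
// Hypothetical post-processing step for the array returned by get_links:
// keep only unique http(s) URLs.
const clean_links = (hrefs) => {
  const seen = new Set()
  for (const href of hrefs) {
    try {
      const { protocol } = new URL(href) // throws on empty or malformed input
      if (protocol === 'http:' || protocol === 'https:') seen.add(href)
    } catch {
      // Not a valid absolute URL: skip it.
    }
  }
  return [...seen]
}

// Example with placeholder values: a duplicate, a data URI and an empty src.
console.log(clean_links([
  'https://example.com/a.png',
  'https://example.com/a.png',
  'data:image/gif;base64,R0lGODlhAQABAAAAACw=',
  '',
]))
```

A `Set` keeps insertion order, so the cleaned list preserves the order in which the images appeared on the page.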

#2: Save files with axios

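import fs from 'fs';
import path from 'path';
import axios from 'axios';

const download_file = async (url, save) => {
  // Writable stream for the target file (its folder must already exist).
  const writer = fs.createWriteStream(path.resolve(save))

  // Request the file as a stream so it is never held fully in memory.
  const response = await axios({
    url,
    method: 'GET',
    responseType: 'stream'
  })

  // Forward the response body into the file.
  response.data.pipe(writer)

  // Resolve once everything is written; reject if writing or reading fails.
  return new Promise((resolve, reject) => {
    writer.on('finish', resolve)
    writer.on('error', reject)
    response.data.on('error', reject)
  })
}

This function is a bit more complex, as it introduces two new packages: `fs` and `axios`. The first method, `createWriteStream` from the `fs` package, is pretty much self-explanatory: it creates a writable stream. You can read more about it in the Node.js documentation.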

Next, we use axios to ‘tap’ into the server’s URL, and we let axios know that the response is going to be of type ‘stream’. Lastly, since we’re working with a stream, we’re going to use `pipe()` to write the response to our stream.

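With this set up, all that is left to do is combine the two functions into a runnable program. To do so, simply add the following lines of code:

// Make sure the ./images folder exists before running this.
const images = await get_links('https://www.iaai.com/Search?url=PYcXt9jdv4oni5BL61aYUXWpqGQOeAohPK3E0n6DCLs%3d')

// Download one file at a time so that each one gets its own number.
// (With forEach and an async callback, every download would start while i is still 1.)
let i = 1
for (const img of images) {
  // The .svg extension is kept from the original example; adjust it to the actual image type.
  await download_file(img, `./images/${i}.svg`)
  i += 1
}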
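The loop above hard-codes the `.svg` extension, which will mislabel any image that is actually a JPEG or PNG. One way around that is to read the extension from the URL itself. The sketch below does so; `file_name_for` is a hypothetical helper, the URLs are placeholders, and the `.bin` fallback is an arbitrary choice for URLs without a recognizable extension.

```javascript
// Hypothetical helper: derive a file name from the URL instead of assuming
// every image is an SVG. Falls back to ".bin" when the URL path has no
// recognizable extension.
const file_name_for = (url, index) => {
  const { pathname } = new URL(url) // the query string is not part of pathname
  const match = pathname.match(/\.([a-z0-9]{1,5})$/i)
  const extension = match ? match[1].toLowerCase() : 'bin'
  return `${index}.${extension}`
}

// Examples with placeholder URLs.
console.log(file_name_for('https://example.com/cars/photo.JPG?width=640', 1))
console.log(file_name_for('https://example.com/resizer', 2))
```

In the loop, the second argument to `download_file` would then become `'./images/' + file_name_for(img, i)`. A more thorough version could inspect the `Content-Type` response header, since some image URLs carry no extension at all.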

Conclusions

Puppeteer’s documentation may be unclear about file downloading. Despite that, today we discovered quite a few ways of implementing it. But note that scraping files with Puppeteer is not really an easy task. You see, automated browsers like the one Puppeteer launches are often detected and blocked quickly.

If you’re looking for a stealthy way of downloading files programmatically, you may turn your attention to a web scraping service. At Web Scraping API we’ve invested a lot of time and effort into hiding our fingerprints so that we don’t get detected. And it shows real results in our success rate. Downloading files using Web Scraping API can be as easy as sending a curl request, and we’ll take care of the rest.

This being said, I really hope today’s article helped you in your learning process. Moreover, I hope we’ve checked both of our initial goals. Starting now, you should be able to build your own tools and download files with Puppeteer.
